2021.07.07

TensorFlow Serving on GKE and GS with Terraform

Hello, this is N.M. from the Next-Generation Systems Research Lab.

This post assumes the reader has basic knowledge of Kubernetes, GKE, GS, TensorFlow Serving and Terraform.

In my last post, I created a custom TensorFlow Serving image for the RepNet model. It ran on Google Kubernetes Engine (GKE), and I managed it with the kubectl command-line tool. This setup had a couple of pain points for deployment.

- Every time I made a change to my model, I had to rebuild my custom image and re-publish it to the Google Artifact Registry Docker repository. However, TensorFlow Serving can be invoked with a Google Cloud Storage (GS) URI that points to external model data. By using this functionality and storing my RepNet model separately on GS, I can deploy new model data without having to rebuild, re-publish, and re-deploy the TensorFlow Serving image.

- Spinning up a new deployment was not as easy as I wanted. I used a mix of tools to create the cluster, configure kubectl, and then deploy. I wanted to explore using Terraform to manage a Kubernetes deployment, so that I could spin up a cluster and tear it down as easily as possible.

Separating the model data from TensorFlow Serving

First, let’s look at the requirements to separate the model data from the TensorFlow Serving image. The command for invoking the vanilla image and specifying a model location is as shown below:

```shell
docker run -d -p 8500:8500 -p 8501:8501 tensorflow/serving \
  --port=8500 --rest_api_port=8501 \
  --model_name=${MODEL_NAME} --model_base_path=${MODEL_BASE_PATH}/${MODEL_NAME}
```

The --model_base_path option tells TensorFlow Serving (not Docker) where to look for the model data. By default, the TensorFlow Serving image expects the model data, in SavedModel format, under /models/${MODEL_NAME} in the container file system. model_base_path can also be a GS URI of the form gs://${MY_MODEL_BUCKET}/${MY_MODEL_NAME}.

Note that the actual model data needs to be in a version-numbered directory below model_base_path. This applies to GS locations just as it does to local paths. So in this example, my model data would be in gs://${MY_MODEL_BUCKET}/repnet_model/1, gs://${MY_MODEL_BUCKET}/repnet_model/2, etc. If you do not store your data below a version number, TensorFlow Serving will not recognize the model data and will not load it. This is easy to miss because it fails quietly: the server keeps running but serves no model.
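As an illustration of the version-directory rule, here is a minimal Python sketch of how TensorFlow Serving's default "latest" version policy picks what to load. This is not the server's actual implementation. Only child directories with purely numeric names count as versions, and the numerically highest one wins. The function name latest_servable_version and the idea of passing in a plain list of directory names are assumptions made for illustration; the real server lists model_base_path itself.

```python
import re
from typing import Iterable, Optional


def latest_servable_version(child_names: Iterable[str]) -> Optional[int]:
    """Return the highest numeric version found among child directory names.

    Simplified model of TensorFlow Serving's default "latest" version policy:
    only names made purely of digits count as versions; anything else
    (saved_model.pb, variables/, tmp/) is ignored. Returns None when no
    version directory exists, i.e. nothing would be served.
    """
    versions = []
    for name in child_names:
        stripped = name.rstrip("/")  # GS listings often end directories with "/"
        if re.fullmatch(r"[0-9]+", stripped):
            versions.append(int(stripped))
    return max(versions) if versions else None


# Children of gs://MY_MODEL_BUCKET/repnet_model/ in the recommended layout
print(latest_servable_version(["1/", "2/", "10/"]))  # 10
# SavedModel files placed directly under model_base_path: no version found
print(latest_servable_version(["saved_model.pb", "variables/"]))  # None
```

Versions are compared as integers, so 10 beats 2 even though "10" sorts before "2" as a string.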

Now that we know how to tell TensorFlow Serving where to find model data in GS, let’s look at how we might implement this on Kubernetes.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: repnet-namespace
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: repnet-deployment
  namespace: repnet-namespace
spec:
  replicas: 3
  selector:
    matchLabels:
      app: repnet-server
  template:
    metadata:
      labels:
        app: repnet-server
    spec:
      containers:
      - name: repnet-container
        image: tensorflow/serving:1.15.0
        args:
        - --port=8500
        - --model_name=repnet
        - --model_base_path=gs://GS_LOCATION/repnet_model
        env:
        - name: GOOGLE_APPLICATION_CREDENTIALS
          value: /secret/gcp-credentials/key.json
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: "6Gi"
          requests:
            memory: "3Gi"
        volumeMounts:
        - mountPath: /secret/gcp-credentials
          name: gcp-credentials
        ports:
        - containerPort: 8500
      volumes:
      - name: gcp-credentials
        secret:
          secretName: user-gcp-sa
---
apiVersion: v1
kind: Service
metadata:
  labels:
    run: repnet-service
  name: repnet-service
  namespace: repnet-namespace
spec:
  ports:
  - port: 8500
    targetPort: 8500
  selector:
    app: repnet-server
  type: LoadBalancer
```

This Kubernetes configuration creates 3 Pods (replicas), each hosting one container (repnet-container). More complicated Pods may host multiple containers, such as an app server paired with a web server in the same Pod.

The --model_base_path argument tells TensorFlow Serving where to find the model data. You need to replace GS_LOCATION with your own bucket and path.

My model uses quite a lot of memory, so I am generous with memory resources: each container requests 3Gi and is limited to 6Gi.
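Kubernetes uses the request (3Gi) when deciding which node a Pod fits on. A container that uses more memory than its limit (6Gi) can be OOM-killed. As a rough aid for reading these values, here is a small Python sketch that converts such quantities to bytes. The helper memory_bytes is hypothetical. It handles only integer values with the common binary (Ki, Mi, Gi, Ti) and decimal (k, M, G, T) suffixes, not the full Kubernetes quantity grammar.

```python
import re

# Multipliers for the memory suffixes used in the manifests above and their
# decimal counterparts (a subset of the Kubernetes quantity format).
_MULTIPLIERS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def memory_bytes(quantity: str) -> int:
    """Convert an integer memory quantity such as "3Gi" or "512M" to bytes."""
    match = re.fullmatch(r"([0-9]+)(Ki|Mi|Gi|Ti|k|M|G|T)?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, suffix = match.groups()
    return int(number) * (_MULTIPLIERS[suffix] if suffix else 1)


request = memory_bytes("3Gi")  # used by the scheduler to place the Pod
limit = memory_bytes("6Gi")    # exceeding this can get the container OOM-killed
assert request <= limit
print(request, limit)  # 3221225472 6442450944
```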

Also, the eagle-eyed will notice that I set GOOGLE_APPLICATION_CREDENTIALS to a JSON file (key.json) in the container. key.json contains credentials for a service account that has access to the GS bucket.

A Kubernetes secret is used for this file because we want it to be separate from the docker image and mounted at deploy time.

This kubectl command creates the secret.

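```shell
kubectl --namespace="repnet-namespace" create secret generic user-gcp-sa --from-file=key.json=./${SERVICE_ACCOUNT_CREDENTIALS}.json
```

Before running it, you will need to have done three things:
- created a GS bucket for your model data,
- granted a service account access to that bucket, and
- exported a key for that service account to a JSON file.

SERVICE_ACCOUNT_CREDENTIALS should be the name of that JSON file without the .json extension; the command reads the file from the current directory. The command creates an Opaque Kubernetes secret named user-gcp-sa whose single key, key.json, holds the file contents, which lets us use key.json at deploy time. Secrets are namespaced, so the secret must be created in the same namespace as the Pods that mount it (here repnet-namespace, which must already exist).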
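To see what the secret actually contains, here is a minimal Python sketch that builds the same kind of Opaque Secret manifest that kubectl create secret generic --from-file produces. You can compare it against the real thing by adding kubectl's --dry-run=client -o yaml flags. The function generic_secret_manifest and the fake key contents are hypothetical. The point the sketch shows is that the file contents are only base64-encoded, not encrypted.

```python
import base64
import json


def generic_secret_manifest(name: str, namespace: str, files: dict) -> dict:
    """Build an Opaque Secret manifest similar to what
    `kubectl create secret generic NAME --from-file=KEY=PATH` sends.

    `files` maps each key (the file name the Pod will see) to raw bytes.
    Values are base64-encoded, which is an encoding, not encryption.
    """
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {
            key: base64.b64encode(content).decode("ascii")
            for key, content in files.items()
        },
    }


# Hypothetical, obviously fake key file contents for illustration only.
fake_key = b'{"type": "service_account", "project_id": "YOUR PROJECT"}'
manifest = generic_secret_manifest(
    "user-gcp-sa", "repnet-namespace", {"key.json": fake_key}
)
print(json.dumps(manifest, indent=2))

# Anyone who can read the Secret object can recover the key file directly.
assert base64.b64decode(manifest["data"]["key.json"]) == fake_key
```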

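The volumes section defines a Kubernetes volume, gcp-credentials, backed by the user-gcp-sa secret. The container's volumeMounts section then mounts that volume at /secret/gcp-credentials. Once mounted, key.json is accessible from within the deployed container at /secret/gcp-credentials/key.json, which is exactly where GOOGLE_APPLICATION_CREDENTIALS points.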
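The mapping from secret to file path can be expressed as a tiny Python sketch. By default (when the volume does not list specific items), each key in a secret volume becomes a file named after that key under mountPath. The helper names below are hypothetical. They just check that the GOOGLE_APPLICATION_CREDENTIALS value lines up with the mounted key.json.

```python
import posixpath


def secret_file_paths(mount_path: str, secret_keys: list) -> list:
    """Paths at which each secret key appears when the whole secret is mounted."""
    return [posixpath.join(mount_path, key) for key in secret_keys]


def credentials_are_mounted(env_value: str, mount_path: str, secret_keys: list) -> bool:
    """True if GOOGLE_APPLICATION_CREDENTIALS points at one of the mounted files."""
    return env_value in secret_file_paths(mount_path, secret_keys)


# Values from the Deployment above: mountPath, secret key, and env value.
print(secret_file_paths("/secret/gcp-credentials", ["key.json"]))
# ['/secret/gcp-credentials/key.json']
print(credentials_are_mounted(
    "/secret/gcp-credentials/key.json", "/secret/gcp-credentials", ["key.json"]
))  # True
```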

Note that, by default, Kubernetes secrets are stored unencrypted in etcd, the Kubernetes key-value store; the values are only base64-encoded. This means anyone who gains access to etcd can read your data. However, GCP encrypts all customer data at rest, including Kubernetes secrets, which gives some default protection in GKE. For further protection against attacks, I recommend this Google blog post: Exploring container security: Encrypting Kubernetes secrets with Cloud KMS.

Lastly, a LoadBalancer Service is configured to provide external access to our Pods. After deployment, GKE will have created an external (Ingress) IP address for this load balancer, which can be queried with kubectl.

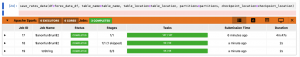

```
❯ kubectl --namespace="repnet-namespace" get services
NAME             TYPE           CLUSTER-IP     EXTERNAL-IP     PORT(S)          AGE
repnet-service   LoadBalancer   <CLUSTER IP>   <EXTERNAL IP>   8500:32380/TCP   24h
```

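We see that we can access <EXTERNAL IP>:8500 for RepNet predictions. Port 8500 is TensorFlow Serving's gRPC port (--port); the REST port (--rest_api_port) is not exposed by this Service, so predictions are requested over gRPC.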
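The PORT(S) column 8500:32380/TCP lists three things in order: the Service port (8500), the node port Kubernetes allocated (32380), and the protocol. The Python sketch below pulls out the EXTERNAL-IP and Service port and joins them into a host:port target of the kind a gRPC client takes, for example grpc.insecure_channel(target). The sketch is hypothetical and assumes the default column layout; the addresses in the sample are made up. For scripts, kubectl --namespace="repnet-namespace" get service repnet-service -o jsonpath='{.status.loadBalancer.ingress[0].ip}' avoids parsing text at all.

```python
def grpc_target_from_kubectl(output: str, service: str = "repnet-service") -> str:
    """Return "<EXTERNAL-IP>:<service port>" for `service` from
    `kubectl get services` table output (default column layout assumed).
    """
    lines = output.strip().splitlines()
    header = lines[0].split()
    for line in lines[1:]:
        row = dict(zip(header, line.split()))
        if row.get("NAME") != service:
            continue
        # "8500:32380/TCP" -> service port "8500" (node port and protocol dropped);
        # only the first entry is used if several ports are listed.
        first_port = row["PORT(S)"].split(",")[0]
        service_port = first_port.split("/")[0].split(":")[0]
        return f"{row['EXTERNAL-IP']}:{service_port}"
    raise LookupError(f"service {service!r} not found")


# Made-up addresses in the same layout as the output above.
sample = """\
NAME             TYPE           CLUSTER-IP   EXTERNAL-IP    PORT(S)          AGE
repnet-service   LoadBalancer   10.8.0.12    203.0.113.10   8500:32380/TCP   24h
"""
print(grpc_target_from_kubectl(sample))  # 203.0.113.10:8500
```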

Note that when you use a non-default namespace, you must specify it in every kubectl command.

So now, we have a vanilla TensorFlow Serving container accessing model data from GS and authenticating with a GCP service account using a Kubernetes secret.

Using Terraform to manage GKE

There are two main tasks involved in setting up a Kubernetes deployment: setting up the cluster itself (the nodes that Kubernetes runs on), and then setting up our Pods and Services. I will discuss using Terraform for both tasks.

Setting up the cluster with Terraform

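```hcl
# Values for these two variables are set in terraform.tfvars
variable "project_id" {}
variable "region" {}

variable "gke_num_nodes" {
  default     = 5
  description = "number of gke nodes"
}

provider "google" {
  project = var.project_id
  region  = var.region
}

# GKE cluster
resource "google_container_cluster" "primary" {
  name     = "${var.project_id}-gke"
  location = var.region

  # We can't create a cluster with no node pool defined, but we want to only use
  # separately managed node pools. So we create the smallest possible default
  # node pool and immediately delete it.
  remove_default_node_pool = true
  initial_node_count       = 1
}

# Separately Managed Node Pool
resource "google_container_node_pool" "primary_nodes" {
  name       = "${google_container_cluster.primary.name}-node-pool"
  location   = var.region
  cluster    = google_container_cluster.primary.name
  node_count = var.gke_num_nodes

  node_config {
    oauth_scopes = [
      "https://www.googleapis.com/auth/logging.write",
      "https://www.googleapis.com/auth/monitoring",
    ]

    labels = {
      env = var.project_id
    }

    # preemptible  = true
    machine_type = "n2-standard-4"
    tags         = ["gke-node", "${var.project_id}-gke"]
    metadata = {
      disable-legacy-endpoints = "true"
    }
  }
}

# Outputs read by the repnet-k8s configuration (via terraform_remote_state)
output "project_id" {
  value = var.project_id
}
output "region" {
  value = var.region
}
output "kubernetes_cluster_name" {
  value = google_container_cluster.primary.name
}
output "kubernetes_cluster_host" {
  value = google_container_cluster.primary.endpoint
}
```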

This configuration is saved in a file called gke.tf, in a folder for our cluster configuration, let’s call it gke.

For more detail on setting up clusters on GKE with Terraform (including details on using a VPC) see: https://learn.hashicorp.com/tutorials/terraform/gke?in=terraform/kubernetes

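This configuration creates a GKE node pool of n2-standard-4 machines (4 vCPUs, 16 GB memory), which are needed for the container memory requirements.

Because location is set to a region rather than a zone, GKE creates a regional cluster, and node_count applies to each zone in the region. So gke_num_nodes = 5 gives 5 nodes per zone (15 nodes in a region with three zones), not 5 in total.

The configuration uses one variable with a local default, gke_num_nodes, and two whose values come from a separate file, project_id and region. To set those values, create a file named terraform.tfvars in the same directory as the config file. The contents should look something like this:

```hcl
project_id = "YOUR PROJECT"
region     = "YOUR REGION"
```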
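To make the regional node count concrete, here is a back-of-the-envelope Python sketch. regional_node_total multiplies the per-zone node_count by the number of zones; GKE uses three zones for a regional cluster by default. pods_per_node counts how many 3Gi memory requests fit on one node. The allocatable memory figure is a hypothetical assumption. GKE reserves part of each node's memory for system components, so check the Allocatable value that kubectl describe node reports for your nodes.

```python
GiB = 2**30


def regional_node_total(node_count_per_zone: int, zones: int = 3) -> int:
    """node_count on a regional node pool is applied in every zone."""
    return node_count_per_zone * zones


def pods_per_node(allocatable_bytes: int, request_bytes: int) -> int:
    """Pods that fit on one node when memory requests are the only constraint."""
    return allocatable_bytes // request_bytes


print(regional_node_total(5))  # 15 nodes for gke_num_nodes = 5 across 3 zones
# Hypothetical allocatable figure: assume about 13 GiB of an n2-standard-4's
# 16 GB is left for Pods after GKE's system reservations.
print(pods_per_node(13 * GiB, 3 * GiB))  # 4
```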

Creating the above folder and files makes the following folder structure:

```
gke/
├── gke.tf
└── terraform.tfvars
```

From inside the gke folder we can provision and start our GKE cluster with the following terraform commands.

```shell
terraform init   # only run once
terraform apply  # to apply the .tf configuration
```

Answer yes when terraform apply prompts for confirmation.

After running, you should see output confirming that the cluster was created on GKE:

```
Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Outputs:

kubernetes_cluster_host = "CLUSTER HOST IP"
kubernetes_cluster_name = "YOUR PROJECT-gke"
project_id = "YOUR PROJECT"
region = "YOUR REGION"
```

Here, YOUR PROJECT and YOUR REGION are the values set in terraform.tfvars.

Deploying the Pods and Services with Terraform

Here we want to recreate the configuration we saw at the beginning of this article for our RepNet Pods and Service. Remember that the Pods host our TensorFlow Serving container, and the Service gives access to those Pods. The following Terraform configuration achieves this.

```hcl
terraform {
  required_providers {
    google = {
      source  = "hashicorp/google"
      version = "3.52.0"
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = ">= 2.0.1"
    }
  }
}

# Read the outputs of the cluster configuration in ../gke
data "terraform_remote_state" "gke" {
  backend = "local"

  config = {
    path = "../gke/terraform.tfstate"
  }
}

# Retrieve GKE cluster information
provider "google" {
  project = data.terraform_remote_state.gke.outputs.project_id
  region  = data.terraform_remote_state.gke.outputs.region
}

# Configure kubernetes provider with Oauth2 access token.
# https://registry.terraform.io/providers/hashicorp/google/latest/docs/data-sources/client_config
# This fetches a new token, which will expire in 1 hour.
data "google_client_config" "default" {}

data "google_container_cluster" "my_cluster" {
  name     = data.terraform_remote_state.gke.outputs.kubernetes_cluster_name
  location = data.terraform_remote_state.gke.outputs.region
}

provider "kubernetes" {
  host                   = data.terraform_remote_state.gke.outputs.kubernetes_cluster_host
  token                  = data.google_client_config.default.access_token
  cluster_ca_certificate = base64decode(data.google_container_cluster.my_cluster.master_auth[0].cluster_ca_certificate)
}

resource "kubernetes_namespace" "repnet" {
  metadata {
    name = "repnet-namespace"
  }
}

resource "kubernetes_deployment" "repnet" {
  metadata {
    name      = "repnet-deployment"
    namespace = kubernetes_namespace.repnet.metadata.0.name
  }

  spec {
    replicas = 2

    selector {
      match_labels = {
        app = "repnet-server"
      }
    }

    template {
      metadata {
        labels = {
          app = "repnet-server"
        }
      }

      spec {
        container {
          name  = "repnet-container"
          image = "tensorflow/serving:1.15.0"
          # Replace GS_LOCATION with your own bucket and path
          args = ["--port=8500", "--model_name=repnet", "--model_base_path=gs://GS_LOCATION/repnet_model"]

          env {
            name  = "GOOGLE_APPLICATION_CREDENTIALS"
            value = "/secret/gcp-credentials/key.json"
          }

          image_pull_policy = "IfNotPresent"

          resources {
            limits = {
              memory = "6Gi"
            }
            requests = {
              memory = "3Gi"
            }
          }

          volume_mount {
            name       = "gcp-credentials"
            mount_path = "/secret/gcp-credentials"
          }

          port {
            container_port = 8500
          }
        }

        volume {
          name = "gcp-credentials"
          secret {
            secret_name = "user-gcp-sa"
          }
        }
      }
    }
  }
}

resource "kubernetes_service" "repnet" {
  metadata {
    name      = "repnet-service"
    namespace = kubernetes_namespace.repnet.metadata.0.name
  }

  spec {
    selector = {
      app = kubernetes_deployment.repnet.spec.0.template.0.metadata.0.labels.app
    }
    type = "LoadBalancer"

    port {
      port        = 8500
      target_port = 8500
    }
  }
}

# External (Ingress) IP of the GKE load balancer
output "lb_ip" {
  value = kubernetes_service.repnet.status.0.load_balancer.0.ingress.0.ip
}
```

Most of this configuration is very similar to the Kubernetes configuration shown at the beginning of this article; one difference is that it runs 2 replicas rather than 3. As with the cluster creation task above, this configuration should be saved in its own folder, say repnet-k8s, in a .tf file, say repnet-k8s.tf.

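At the beginning of repnet-k8s.tf, the terraform_remote_state data source reads the outputs of the cluster configuration from the gke folder's local state file (../gke/terraform.tfstate).

From those outputs we retrieve the project and region that we configured in the cluster setup step, and use them to configure the google provider. The kubernetes provider then gets what it needs to connect to the cluster from two data sources: google_container_cluster looks up the cluster's CA certificate, and google_client_config supplies a short-lived OAuth2 access token.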
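For intuition about what terraform_remote_state reads, here is a minimal Python sketch that extracts output values from a local state file. It assumes the version 4 JSON state layout written by Terraform 0.12 and later, in which outputs sit under a top-level "outputs" object as {"value": ..., "type": ...} entries. The state file is an internal format, so terraform output -json is the supported way to read outputs from scripts. The function read_local_state_outputs and the demo values are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def read_local_state_outputs(path) -> dict:
    """Return {output_name: value} from a local terraform.tfstate file.

    Assumes the version 4 state layout (Terraform 0.12+), where each output
    is stored under the top-level "outputs" key as {"value": ..., "type": ...}.
    """
    state = json.loads(Path(path).read_text())
    return {name: entry["value"] for name, entry in state.get("outputs", {}).items()}


# Demo with a tiny, made-up state file shaped like the gke folder's outputs.
demo_state = {
    "version": 4,
    "outputs": {
        "project_id": {"value": "YOUR PROJECT", "type": "string"},
        "region": {"value": "YOUR REGION", "type": "string"},
        "kubernetes_cluster_name": {"value": "YOUR PROJECT-gke", "type": "string"},
    },
}
with tempfile.TemporaryDirectory() as tmp:
    state_path = Path(tmp) / "terraform.tfstate"
    state_path.write_text(json.dumps(demo_state))
    print(read_local_state_outputs(state_path))
```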

At the end of repnet-k8s.tf, the Ingress IP is output as lb_ip. Remember, this is the IP of the GKE load balancer we use to access RepNet. Because Terraform prints it, we don't need to run kubectl get services to find it.

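To set up and deploy, first make sure the cluster from the gke folder has been created, since this configuration reads its state file. Then cd to the repnet-k8s folder and execute the following commands.

```shell
terraform init   # only run once
terraform apply  # to apply the .tf configuration
```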

Summary

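Now we are able to use vanilla TensorFlow Serving to serve our models housed in GS. TensorFlow Serving periodically polls model_base_path and, by default, serves the highest-numbered version directory it finds. This means we can deploy a new model version simply by uploading it to a new version directory on GS, which makes development and maintenance easier.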
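A new release then amounts to uploading the SavedModel into the next numbered directory. The hypothetical Python helper below computes that destination from the existing child directory names. It leaves out how you list the bucket and perform the upload (for example with gsutil or a client library), and the bucket name is a placeholder.

```python
import re


def next_version_uri(base_uri: str, existing_children: list) -> str:
    """Return the gs:// URI of the next numbered version directory.

    Versions are compared as integers ("10" is newer than "9"); non-numeric
    children are ignored. With no existing versions, the first one is 1.
    """
    versions = [
        int(name.rstrip("/"))
        for name in existing_children
        if re.fullmatch(r"[0-9]+", name.rstrip("/"))
    ]
    return f"{base_uri.rstrip('/')}/{max(versions, default=0) + 1}"


# MY_MODEL_BUCKET is a placeholder for your own bucket name.
print(next_version_uri("gs://MY_MODEL_BUCKET/repnet_model", ["1/", "2/"]))
# gs://MY_MODEL_BUCKET/repnet_model/3
```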

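Because we are using Terraform, creating the cluster and deploying to it takes only a few simple terraform commands. To clean up either the deployment or the cluster, simply run terraform destroy from the appropriate folder. Destroy the deployment (repnet-k8s) before the cluster (gke), because the repnet-k8s configuration needs the cluster to still exist in order to connect to it.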

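The Next-Generation Systems Research Lab is looking for architects to support integration across the entire group. If you have experience designing and building infrastructure, or are interested in the Next-Generation Systems Research Lab, please apply via our list of open positions.

We look forward to your applications.

The Group Research and Development Division posts its latest news on Twitter. Please follow us at @GMO_RD.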