2017.02.11

Multi Datacenter Cassandra on Vagrant with Ansible

Here I introduce a project for testing a multi-datacenter Cassandra setup locally. All files discussed are available for download here: cassandra-cross-dc

Goal

- Create a local simulated multi-datacenter Cassandra cluster on Vagrant for testing various settings.

- It should be easy to set up, tear down and recreate if necessary.

Sub-goals

- Transmissions between datacenters should be SSL-encrypted and authenticated.

- Ansible should handle creation and configuration of SSL keys and certificates.

- It should be easy to add nodes to an existing cluster.

Requirements

The attached Vagrantfile creates six Ubuntu Vagrant instances running Cassandra. Each is allocated 1536 MB of memory (about 9 GB in total), so you will need a reasonable amount of memory. I had no problems with a MacBook Pro with 16 GB.

Some Design Decisions

Loose coupling between ansible and vagrant

Vagrant's Ansible provisioner lets you create the complete environment with just the vagrant up command, since the Ansible playbook is called from within the Vagrantfile. This is fine for simple environments, but in this case I wanted the flexibility of running Ansible on its own.

Invoking Ansible directly from the command line lets us run many different kinds of commands, such as playbook runs restricted by tags, ad-hoc commands and so on.

Note that when Ansible is run from within the Vagrantfile, Vagrant automatically creates an Ansible inventory file containing the connection properties for each host, as shown below.

.vagrant/provisioners/ansible/inventory/vagrant_ansible_inventory

# vagrant created ansible hosts file
cassandra_1 ansible_ssh_host=127.0.0.1 ansible_ssh_port=2222 ansible_ssh_user='vagrant' ansible_ssh_private_key_file='/cassandra-cross-dc/.vagrant/machines/cassandra_1/virtualbox/private_key'
...

I reused these connection properties in the custom inventory file so that Ansible can connect seamlessly to the Vagrant guests.

We use Vagrant's connection properties, with the Vagrant private key specified individually for each node.

Vagrant-specific configuration is isolated within the inventory file, which makes it easy to add non-Vagrant inventories: just add another hosts file.
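A minimal Ruby sketch (Ruby because the Vagrantfile is Ruby) of reusing those connection properties: it splits one line of Vagrant's generated inventory into the host name and a hash of variables, which could then be carried over into the custom hosts file. parse_host_line is a hypothetical helper, not part of the project, and it assumes plain key=value pairs whose values contain no spaces, which holds for the generated line shown above.

```ruby
# Hypothetical helper (not part of the project): split one INI-style
# inventory host line into the host name and a hash of its variables.
# Assumes key=value pairs with no spaces in values; surrounding quotes are stripped.
def parse_host_line(line)
  host, *pairs = line.split
  vars = pairs.to_h do |pair|
    key, value = pair.split("=", 2)
    [key, value.gsub(/\A['"]|['"]\z/, "")]
  end
  [host, vars]
end

# One line of .vagrant/provisioners/ansible/inventory/vagrant_ansible_inventory
VAGRANT_LINE = "cassandra_1 ansible_ssh_host=127.0.0.1 ansible_ssh_port=2222 " \
               "ansible_ssh_user='vagrant' " \
               "ansible_ssh_private_key_file='/cassandra-cross-dc/.vagrant/machines/cassandra_1/virtualbox/private_key'"

host, vars = parse_host_line(VAGRANT_LINE)
puts host                      # cassandra_1
puts vars["ansible_ssh_port"]  # 2222
puts vars["ansible_ssh_user"]  # vagrant
```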

Ansible tags

Ansible tags give more fine-grained control over which tasks within the cassandra.yml playbook get run.
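As a rough model of how tags select tasks, the Ruby sketch below mimics the broad behaviour of ansible-playbook --tags: with no tags requested every task runs; otherwise only tasks carrying a requested tag, plus tasks carrying Ansible's special always tag, are selected. It ignores --skip-tags and the special never tag. The task list and every tag except upload_certs (which this project uses later) are hypothetical.

```ruby
require "set"

# Simplified model of ansible-playbook --tags (ignores --skip-tags and the
# special "never" tag): with no tags requested every task runs; otherwise a
# task runs if it has one of the requested tags or the special "always" tag.
Task = Struct.new(:name, :tags)

def selected_tasks(tasks, requested = [])
  return tasks if requested.empty?
  wanted = Set.new(requested) << "always"
  tasks.select { |task| task.tags.any? { |tag| wanted.include?(tag) } }
end

# Hypothetical task list; only the upload_certs tag comes from this project.
TASKS = [
  Task.new("install cassandra", ["install"]),
  Task.new("render cassandra.yaml", ["config"]),
  Task.new("upload certificates", ["upload_certs"]),
  Task.new("import certificates", ["upload_certs"]),
  Task.new("check cert_dir is set", ["always"])
].freeze

p selected_tasks(TASKS, ["upload_certs"]).map(&:name)
# ["upload certificates", "import certificates", "check cert_dir is set"]
```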

Ansible Group Based Datacenter Configuration

Cassandra nodes are separated into datacenter groups (dc1 and dc2), allowing separate configuration per datacenter.
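A minimal Ruby sketch of the variables a dc1 host ends up with, assuming only the two layers used here: Ansible gives variables from a specific group such as dc1 precedence over group_vars/all, which a plain hash merge mimics. Real Ansible variable precedence has many more levels (host_vars, extra vars and others) that this ignores, and effective_vars is a hypothetical helper.

```ruby
require "yaml"

# group_vars/all.yml (abridged) and group_vars/dc1.yml from this project.
ALL_YML = <<~YML
  ---
  cluster_name: "Test Cluster"
  ansible_python_interpreter: /usr/bin/python3
YML

DC1_YML = <<~YML
  ---
  datacenter: dc1
YML

# Hypothetical helper: merge variable files from least to most specific, so a
# key defined in a datacenter group file would override the same key in all.yml.
def effective_vars(*yaml_docs)
  yaml_docs.map { |doc| YAML.safe_load(doc) }.reduce({}, :merge)
end

vars = effective_vars(ALL_YML, DC1_YML)
puts vars["cluster_name"]  # Test Cluster
puts vars["datacenter"]    # dc1
```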

Note on environment configurations

The group_vars in this project are defined only for the current test environment, but they can easily be extended following Ansible's recommended practice of defining individual environments below an inventories directory, see: http://docs.ansible.com/ansible/playbooks_best_practices.html#alternative-directory-layout. In this way, production, staging and development environments can be added.

Generated SSL Certificates

Each Cassandra node's certificate is generated on that node and then copied into a local directory named after the current timestamp.

This way, previous certificates are never overwritten.
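The naming scheme can be sketched in Ruby as below, assuming the directory name is the local time formatted as YYYYMMDDhhmmss, which matches names such as 20170630113314 in this project. cert_dir_name and latest_cert_dir are hypothetical helpers; in the project the directory is created by the playbook. A useful property of this fixed-width format is that plain string ordering is chronological, so the newest certificate set is simply the largest name.

```ruby
# Hypothetical helpers modelling the timestamped certificate directories,
# e.g. roles/cassandra/files/certs/20170630113314 (not the project's code).
def cert_dir_name(time = Time.now)
  time.strftime("%Y%m%d%H%M%S")  # fixed width, so names sort chronologically
end

def latest_cert_dir(names)
  names.grep(/\A\d{14}\z/).max    # string maximum == most recent timestamp
end

puts cert_dir_name(Time.new(2017, 6, 30, 11, 33, 14))    # 20170630113314
puts latest_cert_dir(%w[20170630113314 20170630124622])  # 20170630124622
```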

Explanation of Components

The project

The project provided contains the following directories and files:

# project directory structure
.
├── Vagrantfile
├── cassandra.yml
├── group_vars
│   ├── all.yml
│   ├── dc1.yml
│   └── dc2.yml
├── hosts
├── restart-cassandra.sh
├── roles
│   └── cassandra
│       ├── files
│       │   └── certs
│       │       ├── 20170630113314
│       │       │   ├── cassandra-1.cer
│       │       │   ├── cassandra-2.cer
│       │       │   ├── cassandra-3.cer
│       │       │   ├── cassandra-4.cer
│       │       │   ├── cassandra-5.cer
│       │       │   └── cassandra-6.cer
│       │       ...
│       ├── handlers
│       │   └── main.yml
│       ├── tasks
│       │   └── main.yml
│       └── templates
│           ├── cassandra-rackdc.properties.j2
│           └── cassandra.yaml.j2
└── schema.sql

Vagrantfile

A loop creating six Ubuntu Xenial nodes.

Each node's IP and forwarded SSH port are explicitly configured for easy connection from Ansible.

# vagrant file
VAGRANTFILE_API_VERSION = "2"

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|
  config.vm.box = "bheegu1/xenial-64"

  N = 6  # number of Cassandra nodes

  (1..N).each do |machine_id|
    config.vm.define "cassandra_#{machine_id}" do |machine|
      machine.vm.hostname = "cassandra-#{machine_id}"
      # private network IPs 192.168.15.101 .. 192.168.15.106
      machine.vm.network "private_network", ip: "192.168.15.#{100 + machine_id}"
      # host ports 2221 .. 2226 forward to guest port 22; id 'ssh' replaces Vagrant's default SSH forward
      machine.vm.network :forwarded_port, guest: 22, host: 2220 + machine_id, id: 'ssh'

      machine.vm.provider "virtualbox" do |v|
        v.memory = "1536"
        # set the guest clock directly once it drifts more than 10 s (10000 ms) from the host
        v.customize ["guestproperty", "set", :id, "/VirtualBox/GuestAdd/VBoxService/--timesync-set-threshold", 10000]
      end
    end
  end
end

playbook

cassandra.yml applies the cassandra role to all the Cassandra hosts.

Group Vars

Separates datacenter-specific configuration (dc1.yml, dc2.yml) from global configuration (all.yml).

dc1.yml

# datacenter specific variables (just the data-center name)
---
datacenter: dc1

all.yml

# global variables
---
# Cassandra configuration
cluster_name: "Test Cluster"
cassandra_seeds: ["192.168.15.101", "192.168.15.102", "192.168.15.103", "192.168.15.104", "192.168.15.105", "192.168.15.106"]
# SSL configurations
key_password: "password"
keystore_password: "password"
truststore_password: "password"
ssl_domain: "domain.com"
ssl_team: "team"
ssl_org: "org"
ssl_city: "city"
ssl_state: "state"
ssl_country: "co"
# Ubuntu default python
ansible_python_interpreter: /usr/bin/python3
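The datacenter variable is presumably what ends up in cassandra-rackdc.properties, the file Cassandra's GossipingPropertyFileSnitch reads to learn a node's datacenter and rack. The template's contents are not shown in this post, so the Ruby sketch below is an assumption: it renders dc and rack keys with Ruby's ERB standing in for Jinja2, the rack name rack1 is a placeholder, and render_rackdc is a hypothetical helper.

```ruby
require "erb"

# Assumed contents of cassandra-rackdc.properties.j2 (not shown in the post);
# ERB stands in for Jinja2 and "rack1" is a placeholder rack name.
RACKDC_TEMPLATE = <<~'TPL'
  dc=<%= datacenter %>
  rack=<%= rack %>
TPL

# Hypothetical helper: render the properties file for one node.
def render_rackdc(datacenter, rack = "rack1")
  ERB.new(RACKDC_TEMPLATE).result_with_hash(datacenter: datacenter, rack: rack)
end

print render_rackdc("dc1")
# dc=dc1
# rack=rack1
```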

hosts inventory file

- two datacenter groups (dc1, dc2) are defined, containing the six Vagrant guests

- the cassandra group contains these two groups

- each host has a listen_address, which is the IP Cassandra nodes use to talk to each other

- each host has Vagrant connection configuration:

– ansible_ssh_port: a port on localhost that is forwarded to port 22 of each Vagrant guest. This local port is configured within the Vagrantfile.

– ansible_ssh_private_key_file: the Vagrant private key file used for the SSH connection

# hosts file
[dc1]
cassandra-1 listen_address=192.168.15.101 ansible_ssh_host=127.0.0.1 ansible_ssh_port=2221 ansible_ssh_user='vagrant' ansible_ssh_private_key_file='.vagrant/machines/cassandra_1/virtualbox/private_key'
...

[dc2]
cassandra-4 listen_address=192.168.15.104 ansible_ssh_host=127.0.0.1 ansible_ssh_port=2224 ansible_ssh_user='vagrant' ansible_ssh_private_key_file='.vagrant/machines/cassandra_4/virtualbox/private_key'
...

[cassandra:children]
dc1
dc2
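Because the Vagrantfile derives each node's addresses from its machine number (IP 192.168.15.(100 + n), SSH port 2220 + n), the hosts entries follow a fixed pattern. The Ruby sketch below generates them. inventory_for is a hypothetical helper (the project uses a hand-written hosts file), and it assumes the nodes are split evenly and in order between dc1 and dc2, consistent with cassandra-1 being in dc1 and cassandra-4 in dc2 in the hosts file.

```ruby
# Hypothetical generator for the hosts inventory, mirroring the Vagrantfile's
# address scheme. Assumes nodes are split evenly, in order, across the datacenters.
def inventory_for(node_count, dcs: %w[dc1 dc2])
  per_dc = (node_count.to_f / dcs.size).ceil
  groups = (1..node_count).group_by { |n| dcs[(n - 1) / per_dc] }

  sections = groups.map do |dc, ids|
    hosts = ids.map do |n|
      "cassandra-#{n} listen_address=192.168.15.#{100 + n} " \
      "ansible_ssh_host=127.0.0.1 ansible_ssh_port=#{2220 + n} " \
      "ansible_ssh_user='vagrant' " \
      "ansible_ssh_private_key_file='.vagrant/machines/cassandra_#{n}/virtualbox/private_key'"
    end
    (["[#{dc}]"] + hosts).join("\n")
  end

  # The cassandra parent group lists the datacenter groups as children.
  (sections + ["[cassandra:children]\n#{dcs.join("\n")}"]).join("\n\n") + "\n"
end

puts inventory_for(6)
```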

role

The cassandra role installs and configures Cassandra.

main.yml

– install Cassandra

– configure Cassandra with the templates cassandra-rackdc.properties.j2 and cassandra.yaml.j2

– create SSL files on each node

– copy all SSL certificates to a timestamped directory on the local machine

– import all certificate files on each node

templates

cassandra.yaml.j2 is the template for cassandra.yaml, the main Cassandra configuration file.

Within this file we have:

cluster_name: '{{ cluster_name }}'

– the name of the Cassandra cluster

- seeds: "{{ cassandra_seeds | join(',') }}"

– the seed nodes through which nodes initially discover each other; the list from all.yml is joined into a comma-separated string

listen_address: {{ listen_address }}

– the IP on this node that other nodes connect to

server_encryption_options:
    internode_encryption: dc

– use SSL for traffic between datacenters

– configure the SSL file locations and passwords
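To make internode_encryption: dc concrete, the Ruby sketch below models the four values Cassandra accepts for this option (none, all, dc, rack) as a predicate over the datacenter and rack of two nodes. It is a simplified illustration rather than Cassandra's implementation; encrypted? is a hypothetical helper and the rack names are placeholders. With dc, only connections that cross a datacenter boundary use SSL, which is exactly the inter-datacenter link this project wants to protect.

```ruby
# Simplified model (not Cassandra's code) of server_encryption_options /
# internode_encryption: is the connection between two nodes SSL-encrypted?
def encrypted?(mode, a, b)
  same_dc   = a[:dc] == b[:dc]
  same_rack = same_dc && a[:rack] == b[:rack]
  case mode
  when :none then false
  when :all  then true
  when :dc   then !same_dc    # only traffic that crosses datacenters
  when :rack then !same_rack  # traffic that crosses racks (or datacenters)
  else raise ArgumentError, "unknown internode_encryption: #{mode}"
  end
end

# Hypothetical placement: cassandra-1 and cassandra-2 in dc1, cassandra-4 in dc2.
node1 = { dc: "dc1", rack: "rack1" }
node2 = { dc: "dc1", rack: "rack1" }
node4 = { dc: "dc2", rack: "rack1" }

puts encrypted?(:dc, node1, node2)  # false
puts encrypted?(:dc, node1, node4)  # true
```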

files

– contains the certificate files downloaded from the Cassandra nodes into timestamped directories

handlers

– contains a handler that restarts Cassandra when its configuration changes

restart-cassandra.sh

– performs a slow rolling restart of all Cassandra nodes; useful if nodes fail to start up properly
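The script itself is not shown here. As a hedged illustration of the rolling pattern, the Ruby sketch below restarts one node at a time and waits until it reports up before moving on. rolling_restart is a hypothetical helper; the restart and health-check steps are passed in as lambdas, and the vagrant ssh commands in the trailing comment are only an example of how they might be wired, not taken from restart-cassandra.sh.

```ruby
# Hypothetical rolling-restart loop (not the contents of restart-cassandra.sh):
# restart one node, poll until it reports up (giving up after max_checks polls),
# and only then move on, so nodes are restarted strictly one at a time.
def rolling_restart(nodes, restart:, up:, max_checks: 30, pause: 10)
  nodes.each_with_object([]) do |node, done|
    restart.call(node)
    checks = 0
    until up.call(node)
      checks += 1
      raise "#{node} did not come back up" if checks >= max_checks
      sleep pause
    end
    done << node
  end
end

# Example wiring with hypothetical commands, not taken from the project:
# rolling_restart(%w[cassandra_1 cassandra_2 cassandra_3],
#   restart: ->(n) { system("vagrant", "ssh", n, "-c", "sudo service cassandra restart") },
#   up:      ->(n) { system("vagrant", "ssh", n, "-c", "nodetool status > /dev/null") })
```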

Some useful ad-hoc commands

remove all Cassandra data

ansible -i hosts all -m shell -a 'rm -rf /var/lib/cassandra/*' --become

remove all SSL configuration

# remove all SSL conf files
ansible -i hosts all -m shell -a 'rm -rf /etc/cassandra/conf/*' --become

# remove all SSL conf files beginning with "."
ansible -i hosts all -m shell -a 'rm -rf /etc/cassandra/conf/.*' --become

Note: the last command reports errors because the pattern .* also matches the special directory entries . and .., which rm refuses to remove. These errors can be safely ignored.

Setup

Use the command below to set up the Cassandra nodes defined in the hosts file:

ansible-playbook -i hosts cassandra.yml

Testing

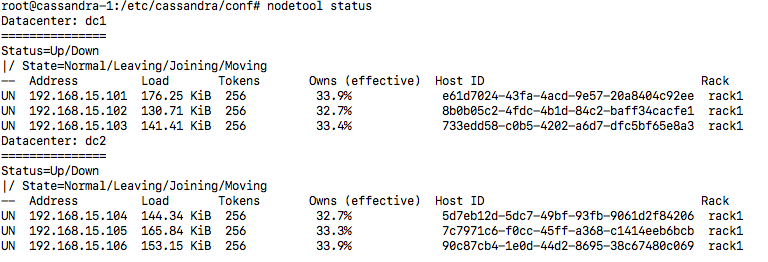

Verify the Cassandra cluster is running with the command below.

nodetool status

This command shows the nodes participating in the Cassandra cluster, grouped by datacenter.
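To check the result from a script rather than by eye, the Ruby sketch below counts nodes per datacenter in nodetool status output, treating the two-letter code UN (Up, Normal) as healthy. node_summary is a hypothetical helper, and SAMPLE_STATUS is a made-up, heavily abbreviated sample in the usual layout (other columns omitted), not real output from this cluster. On a healthy six-node setup you would expect every node in both datacenters to be counted as up_normal.

```ruby
# Hypothetical parser: per datacenter, count nodes in `nodetool status`
# output that are Up/Normal ("UN") and nodes in any other state.
def node_summary(output)
  summary = Hash.new { |hash, dc| hash[dc] = { up_normal: 0, other: 0 } }
  dc = nil
  output.each_line do |line|
    if (m = line.match(/\ADatacenter:\s*(\S+)/))
      dc = m[1]
    elsif dc && (m = line.match(/\A([UD][NLJM])\s+\S/))
      summary[dc][m[1] == "UN" ? :up_normal : :other] += 1
    end
  end
  summary
end

# Made-up, abbreviated sample (other columns omitted), not real output.
SAMPLE_STATUS = <<~OUT
  Datacenter: dc1
  ===============
  Status=Up/Down
  |/ State=Normal/Leaving/Joining/Moving
  --  Address
  UN  192.168.15.101
  UN  192.168.15.102
  DN  192.168.15.103
  Datacenter: dc2
  ===============
  UN  192.168.15.104
OUT

p node_summary(SAMPLE_STATUS)
```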

schema.sql

A schema for testing.

Log in to a Cassandra node:

vagrant ssh cassandra_1

Open the Cassandra query shell:

cqlsh 192.168.15.101 -u cassandra -p cassandra

Copy and paste the CQL from schema.sql:

ALTER KEYSPACE system_auth WITH replication = {'class': 'NetworkTopologyStrategy', 'dc1': 3, 'dc2': 3};

CREATE KEYSPACE IF NOT EXISTS web
  WITH replication = {'class': 'NetworkTopologyStrategy', 'dc1' : 3, 'dc2' : 3};

USE web;

CREATE TABLE IF NOT EXISTS user (
  email text,
  name text,
  PRIMARY KEY(email)
);

Here we set the replication strategy (NetworkTopologyStrategy) for the system_auth and web keyspaces. system_auth is used internally by Cassandra to handle authentication and authorization of Cassandra users; by default it is not replicated across multiple datacenters. web is our test keyspace. Both keyspaces use a replication factor of 3 per datacenter, so with three nodes in each datacenter, as in this setup, every node holds a full copy of the data.
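For a feel of what 3 replicas per datacenter buys, the Ruby sketch below applies Cassandra's quorum rule, a majority of replicas or floor(n / 2) + 1, to the replication map used above. QUORUM counts replicas across all datacenters, while LOCAL_QUORUM counts only those in the coordinator's datacenter; quorum is a hypothetical helper name.

```ruby
# Replication settings from schema.sql: replicas per datacenter.
REPLICATION = { "dc1" => 3, "dc2" => 3 }.freeze

# A quorum is a majority of the replicas: floor(n / 2) + 1 (integer division).
def quorum(replicas)
  replicas / 2 + 1
end

total = REPLICATION.values.sum
local = REPLICATION["dc1"]
puts "QUORUM:       #{quorum(total)} of #{total} replicas"  # 4 of 6
puts "LOCAL_QUORUM: #{quorum(local)} of #{local} replicas"  # 2 of 3
puts "LOCAL_QUORUM tolerates #{local - quorum(local)} replica down per datacenter"  # 1
```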

Adding a new node

To add a new Vagrant box, edit the Vagrantfile, increase N to 7, and start the new machine with vagrant up cassandra_7.

# vagrant file

N = 7

Edit the hosts inventory file and uncomment cassandra-7:

# hosts file
cassandra-7 listen_address=192.168.15.107 ansible_ssh_host=127.0.0.1 ansible_ssh_port=2227 ansible_ssh_user='vagrant' ansible_ssh_private_key_file='.vagrant/machines/cassandra_7/virtualbox/private_key'

Add the new Cassandra node and import the existing nodes' certificates to it:

ansible-playbook -i hosts cassandra.yml --limit cassandra-7 -e "cert_dir=20170630113314"

- --limit cassandra-7 tells Ansible to run only on the new node, cassandra-7

- -e "cert_dir=20170630113314" tells Ansible which certificates to import into the new node

Note: replace 20170630113314 with the name of your own certificate directory.
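Adding a node is a two-way exchange: the new node must trust the existing nodes' certificates (the command above), and the existing nodes must import the new node's certificate (the next command in this section). The Ruby sketch below models each truststore as a set of node names to check that only both steps together yield full mutual trust. add_node and full_mesh? are hypothetical helpers, not project code, and the model assumes a node does not need its own certificate in its truststore.

```ruby
require "set"

# Hypothetical model: each node's truststore is the set of node certificates
# it has imported. A node's own certificate is assumed not to be required.
def add_node(truststores, new_node)
  stores = truststores.transform_values(&:dup)
  existing = truststores.keys
  # Step 1: --limit new_node with the old cert_dir; the new node trusts the existing nodes.
  stores[new_node] = Set.new(existing)
  # Step 2: --limit 'cassandra:!new_node' with the new cert_dir and --tags upload_certs;
  # every existing node imports the new node's certificate.
  existing.each { |node| stores[node] << new_node }
  stores
end

# True if every node trusts every other node's certificate.
def full_mesh?(truststores)
  nodes = truststores.keys
  nodes.all? { |a| (nodes - [a]).all? { |b| truststores[a].include?(b) } }
end

NODES = (1..6).map { |n| "cassandra-#{n}" }.freeze
# After the initial run every node has imported all six certificates.
INITIAL = NODES.to_h { |n| [n, Set.new(NODES)] }

puts full_mesh?(add_node(INITIAL, "cassandra-7"))  # true
```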

Import the new node's certificate to the existing nodes:

ansible-playbook -i hosts cassandra.yml --limit 'cassandra:!cassandra-7' -e "cert_dir=20170630124622" --tags upload_certs

This command uses the --limit option to tell Ansible to run on all nodes except cassandra-7.

cert_dir=20170630124622 is the directory containing the certificate file for cassandra-7 that we want to import; it was created when the previous command copied the new node's certificate to the local machine.

--tags upload_certs tells Ansible to run only the tasks that upload and import the certificate file.
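The pattern 'cassandra:!cassandra-7' combines Ansible's union (:) and exclusion (!) operators. The Ruby sketch below is a simplified resolver for just those two operators, applying exclusions after all unions as Ansible does; resolve_pattern is a hypothetical helper, and placing cassandra-7 in dc2 is an assumption made only for the example.

```ruby
# Simplified resolver for Ansible host patterns (hypothetical helper): handles
# only ":" (union) and a leading "!" (exclusion). Exclusions are applied after
# all unions; "&", "," separators, wildcards and regexes are not modelled.
def resolve_pattern(pattern, groups)
  included = []
  excluded = []
  pattern.split(":").each do |part|
    name = part.delete_prefix("!")
    target = part.start_with?("!") ? excluded : included
    target.concat(groups.fetch(name, [name]))  # a group expands; anything else is a host
  end
  (included - excluded).uniq
end

# Hypothetical inventory after uncommenting cassandra-7 (assumed to be in dc2).
GROUPS = {
  "dc1" => %w[cassandra-1 cassandra-2 cassandra-3],
  "dc2" => %w[cassandra-4 cassandra-5 cassandra-6 cassandra-7]
}
GROUPS["cassandra"] = GROUPS["dc1"] + GROUPS["dc2"]  # [cassandra:children]

p resolve_pattern("cassandra:!cassandra-7", GROUPS)
# ["cassandra-1", "cassandra-2", "cassandra-3", "cassandra-4", "cassandra-5", "cassandra-6"]
```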

Summary

Use this setup to test multi-datacenter Cassandra configurations.

Set up a simulated multi-datacenter Cassandra environment locally with little effort. You can also extend it with non-local environments, as explained in the section “Note on environment configurations”. If you read this far, thanks for reading and good luck!

Download Files -> cassandra-cross-dc

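The Next-Generation Systems Research Lab is looking for architects to support integration across the entire group. If you have experience in infrastructure design and construction, or are interested in the Next-Generation Systems Research Lab, please apply via our list of open positions.

We look forward to your application.

The Group Research and Development Division shares its latest news on Twitter. Please follow us.

Follow @GMO_RD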